Hi DGL team,

I have a question about the incidence matrix in the HGNN example from the "Hypergraph Neural Networks" tutorial in the DGL 1.0.3 documentation.

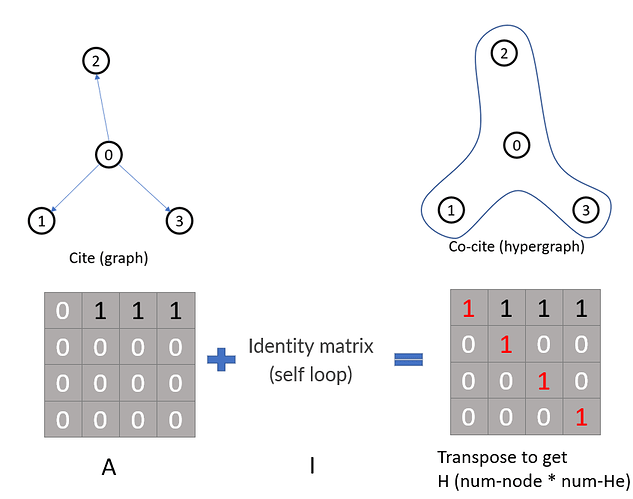

In the Loading Data section, which uses the Cora dataset, you add self-loops to its adjacency matrix to get the incidence matrix of the co-citation hypergraph. If I understand correctly, shouldn't the final matrix be transposed to obtain an incidence matrix of shape (num_nodes, num_hyperedges)?
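To make the transpose question concrete, here is a minimal sketch in plain NumPy. It is not the tutorial's code. The toy edge list and the helper `incidence_from_adjacency` are hypothetical. The sketch builds H = A + I with rows as nodes and columns as hyperedges, then checks whether H equals its transpose. With a directed edge list, H and its transpose describe different hyperedges. With a symmetric adjacency matrix, where each edge is stored in both directions, transposing changes nothing.

```python
import numpy as np

def incidence_from_adjacency(num_nodes, src, dst):
    """Hypothetical helper: build a node-by-hyperedge incidence matrix H = A + I.

    Row v is node v; column e is the hyperedge built around node e.
    A[src, dst] = 1 for each directed edge src -> dst.
    """
    A = np.zeros((num_nodes, num_nodes), dtype=np.int64)
    A[src, dst] = 1
    # Adding self-loops puts each node into its own hyperedge.
    return A + np.eye(num_nodes, dtype=np.int64)

# Hypothetical directed toy graph: 0 -> 1, 0 -> 2, 2 -> 3.
src = np.array([0, 0, 2])
dst = np.array([1, 2, 3])
H_directed = incidence_from_adjacency(4, src, dst)

# The same edges stored in both directions (symmetric adjacency).
src_sym = np.concatenate([src, dst])
dst_sym = np.concatenate([dst, src])
H_symmetric = incidence_from_adjacency(4, src_sym, dst_sym)

print(np.array_equal(H_directed, H_directed.T))    # False: orientation matters
print(np.array_equal(H_symmetric, H_symmetric.T))  # True: transposing changes nothing
```

In the directed case, column 2 of `H_directed` is the hyperedge {0, 2}, built from the nodes pointing into node 2. Column 2 of its transpose is {2, 3}, built from node 2's out-neighbors. So whether the transpose matters comes down to whether the adjacency matrix used to build H is symmetric.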

Looking forward to your insights.